Portable entertainment has split into two unsatisfying extremes. Most AR glasses feel like oversized phone screens floating in front of your face, while VR headsets are immersive but too heavy, bulky, and isolating for everyday use. What is missing is something that feels like a real cinema experience but works on a couch, in bed, on a plane, or in a café without suiting up or strapping a helmet to your face.

Xynavo is a pair of AR glasses built around lightweight immersion, private audio, and expandable functionality. It offers a 70-degree field of view and dual 4K micro-OLED displays, creating a virtual screen equivalent to more than 300 inches, yet weighs only 95 g. The goal is to turn whatever device you already own into a cinema-scale display you can wear, without the weight and noise of a full headset.

Designer: Xynavo

Click Here to Buy Now: $299 (was $499, $200 off). Hurry, only a few units left! Raised over $199,200.

Xynavo fits into evenings at home, where couples can use a multi-device adapter to connect two pairs and share the same screen, playing on a Nintendo Switch or Steam Deck together or watching films and series side by side. Parents and children can share animated movies and family comedies, or connect a game console for interactive play, each with private audio and the same huge virtual screen.

On late nights or quiet weekends alone, you can put on Xynavo and relax on the couch or in bed, watching NBA, NFL, or UEFA Champions League games, or diving into action movies and sci-fi series. The dual 4K clarity and private audio turn it into a theater experience made just for you, without needing to dedicate a room or disturb anyone else in the house.

On planes, high-speed trains, or in hotel rooms, you connect a laptop via USB-C or the included HDMI adapter, pair a wireless keyboard, and handle email or browsing. Then you switch seamlessly to movies or games, all while the glasses stay light enough to wear for full episodes or matches without headband fatigue. The 95 g weight makes hours-long sessions feel manageable instead of exhausting.

Most AR glasses offer a narrow field of view that feels like a big phone, while Xynavo’s 70-degree FOV and dual 4K panels fill your vision with a cinema-scale scene. The high pixel density keeps text crisp and motion smooth, avoiding screen-door effects. A +2D to -6D diopter adjustment range lets many users dial in crystal-clear focus without wearing prescription glasses underneath, making the fit more comfortable.
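To put the 300-inch figure in perspective, here is a rough back-of-the-envelope sketch of how far away a flat 300-inch screen would have to sit to span a 70-degree field of view. It assumes the 70 degrees is measured along the screen diagonal and that the screen is flat and centered, neither of which the product specs confirm; the function names are illustrative only.

```python
import math

def viewing_distance_for(diagonal_in: float, fov_deg: float) -> float:
    """Distance (inches) at which a flat screen of the given diagonal spans fov_deg.

    Assumption: fov_deg is the diagonal field of view and the viewer is centered.
    """
    return (diagonal_in / 2) / math.tan(math.radians(fov_deg) / 2)

def apparent_diagonal(distance_in: float, fov_deg: float) -> float:
    """Inverse relation: diagonal (inches) that fills fov_deg at a given distance."""
    return 2 * distance_in * math.tan(math.radians(fov_deg) / 2)

distance_in = viewing_distance_for(300, 70)
distance_m = distance_in * 0.0254  # inches to meters
print(f"A 300-inch screen fills 70 degrees at about {distance_m:.1f} m")
```

Under those assumptions the result is roughly 5.4 m, about the distance of a seat a few rows back in a small cinema.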

Open-ear AR audio often leaks sound and struggles in noisy or very quiet spaces. Xynavo uses magnetic in-ear modules designed for noise isolation and zero sound leakage, keeping audio clear on trains and planes and private next to someone sleeping. That makes shared spaces and late-night use realistic, with no need for separate headphones and no risk of disturbing people nearby.

Two built-in 3D split-screen modes, 3840×1080 and 1920×1080, let you watch a wider range of 3D content. A long press switches formats, while the dual 4K panels maintain depth and clarity across both modes. This flexibility means more 3D videos, apps, and playback sources work without workarounds or format hunting.
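The two resolutions are easier to compare once you look at what each eye receives. The sketch below assumes both modes are side-by-side formats, where the left half of each frame goes to the left eye and the right half to the right eye; the article does not spell out the layout, so treat this as an illustration rather than a description of how Xynavo processes the signal.

```python
from typing import List, Tuple

Frame = List[List[int]]  # a frame as rows of pixel values (illustrative type)

def per_eye_size(width: int, height: int) -> Tuple[int, int]:
    """Per-eye resolution for a side-by-side frame (assumed left/right layout)."""
    return width // 2, height

def split_side_by_side(frame: Frame) -> Tuple[Frame, Frame]:
    """Split each row down the middle into a left-eye and a right-eye image."""
    half = len(frame[0]) // 2
    left = [row[:half] for row in frame]
    right = [row[half:] for row in frame]
    return left, right

for width, height in [(3840, 1080), (1920, 1080)]:
    eye_w, eye_h = per_eye_size(width, height)
    print(f"{width}x{height} input gives {eye_w}x{eye_h} per eye")

left, right = split_side_by_side([[1, 2, 3, 4], [5, 6, 7, 8]])
```

Under that assumption, the 3840×1080 mode carries a full 1920×1080 image per eye, while the 1920×1080 mode carries 960×1080 per eye, which a display would typically stretch back to a 16:9 picture.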

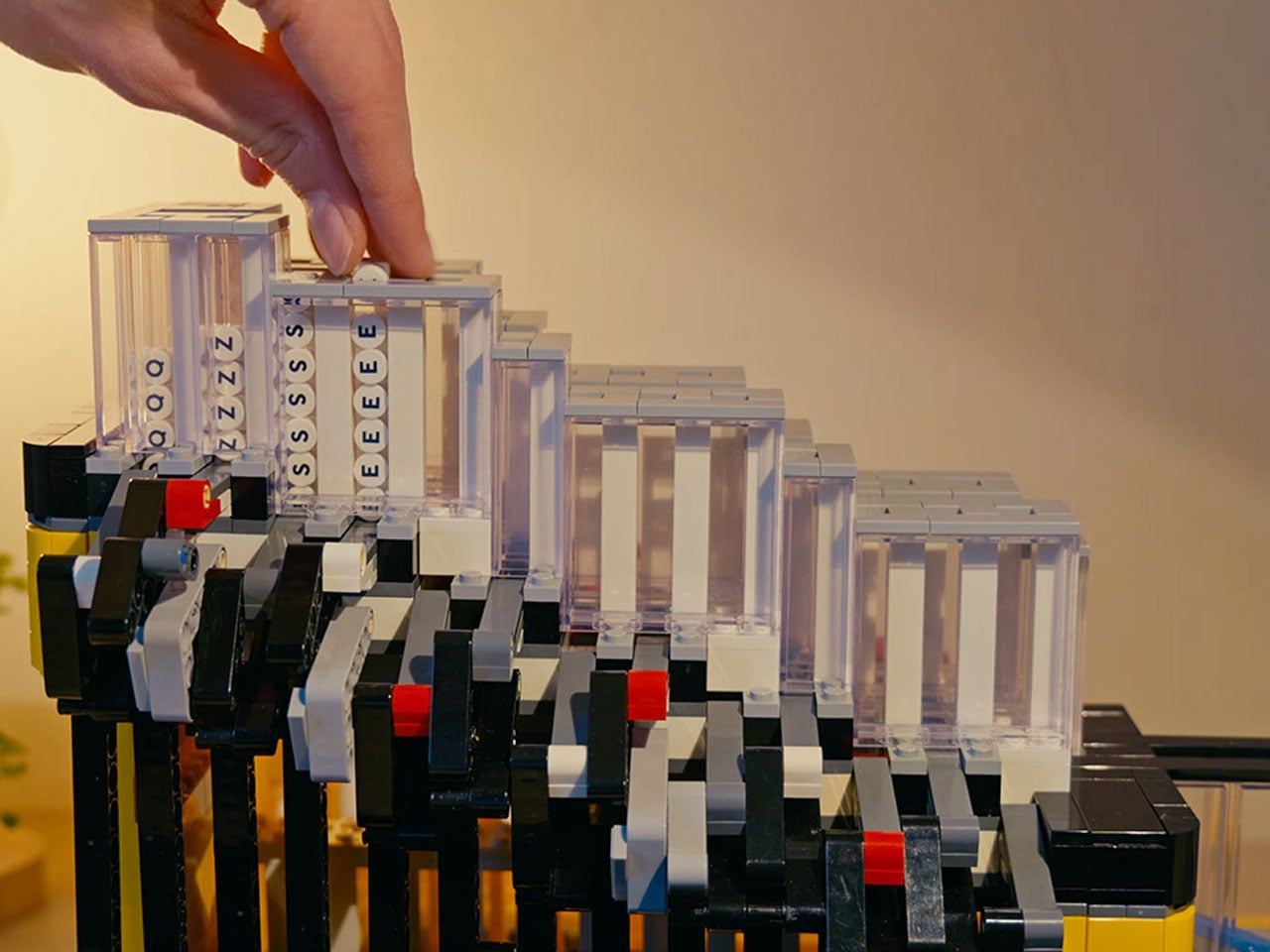

Xynavo connects to smartphones, handheld consoles, tablets, laptops, gaming systems, and PCs via its Type-C cable and included HDMI adapter, working as a plug-and-play external display without special apps or pairing. It is designed as an expandable Type-C vision platform, with support planned for external modules like cameras, night vision, and thermal imaging. That hints at a future where the same lightweight frame can grow with whatever you want to see next.


The post These 95g AR Glasses Replace VR Headsets with a 300-Inch Screen first appeared on Yanko Design.