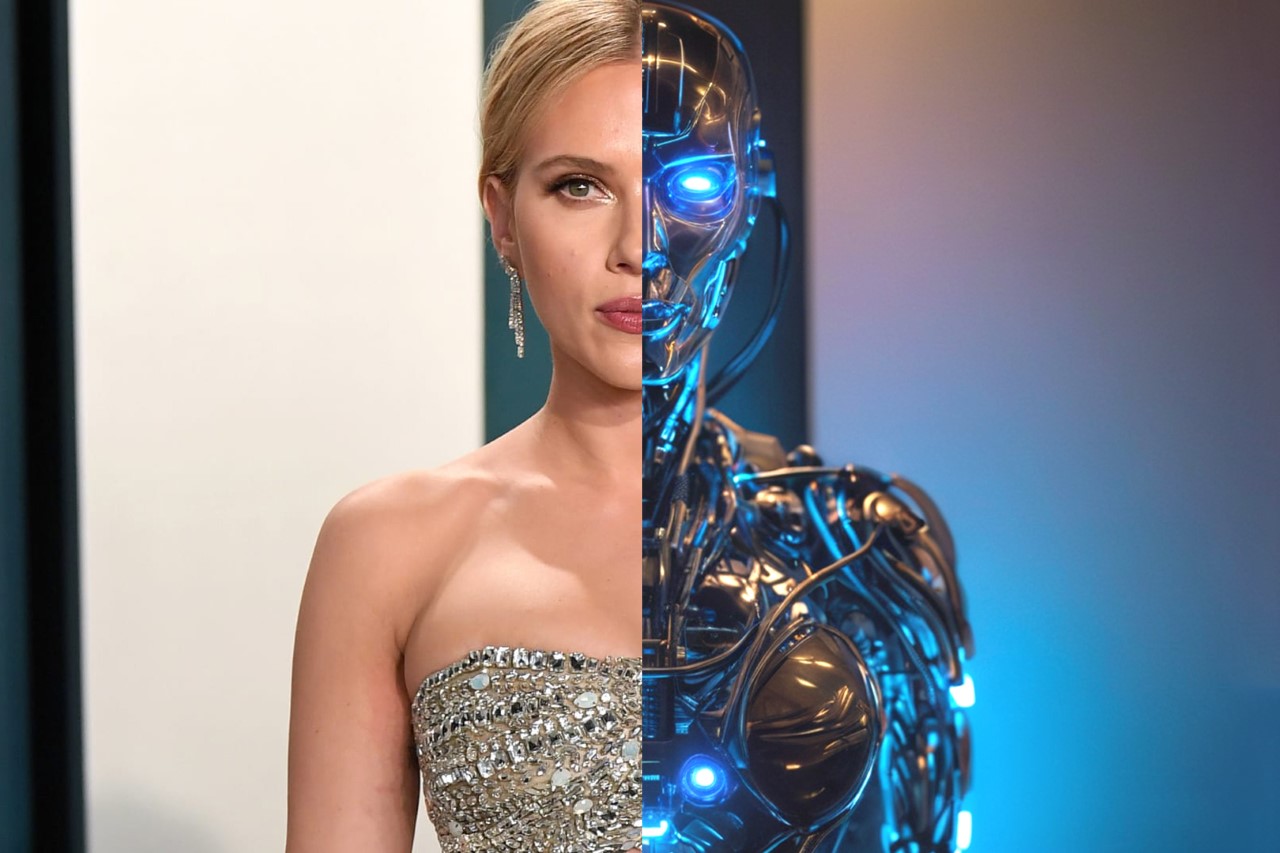

Siri, Alexa, Cortana, Google Assistant, ChatGPT’s GPT-4o… it’s no coincidence that they all have female voices (and sometimes even female names). Spike Jonze even titled his dystopian AI film “Her” in reference to Samantha, the AI assistant at its center, who was voiced by Scarlett Johansson. The movie’s premise sounded absurd 11 years ago, but it feels all too realistic now that OpenAI has announced its voice-based AI model GPT-4o (omni).

The announcement was followed by an uproar from Johansson, who said the AI sounded a lot like her even though she hadn’t given OpenAI permission to use her voice. According to Johansson, OpenAI CEO Sam Altman had approached her to be the voice of GPT-4o, and she declined. Just days before GPT-4o was announced, Altman asked her to reconsider, but the system was released before the two could connect. GPT-4o was announced exactly 10 days ago, on the 13th of May, and Johansson recognized the voice as one that sounded strikingly similar to her own. While many say the voices don’t sound alike, it’s hard to deny that OpenAI was aiming for something closer to Samantha from Her than to a feminine yet mechanical voice like Siri or Google Assistant.

All this brings a few questions to mind: Why do most AI voice assistants have female voices? How do humans perceive these voices? Why don’t you see many male AI voice assistants (and does mansplaining have a role to play here)? And finally, do female voice assistants actually help or harm real women and gender equality in the long run? (Hint: a little bit of both, but the harm seems more daunting.)

AI Voice Assistants: A History

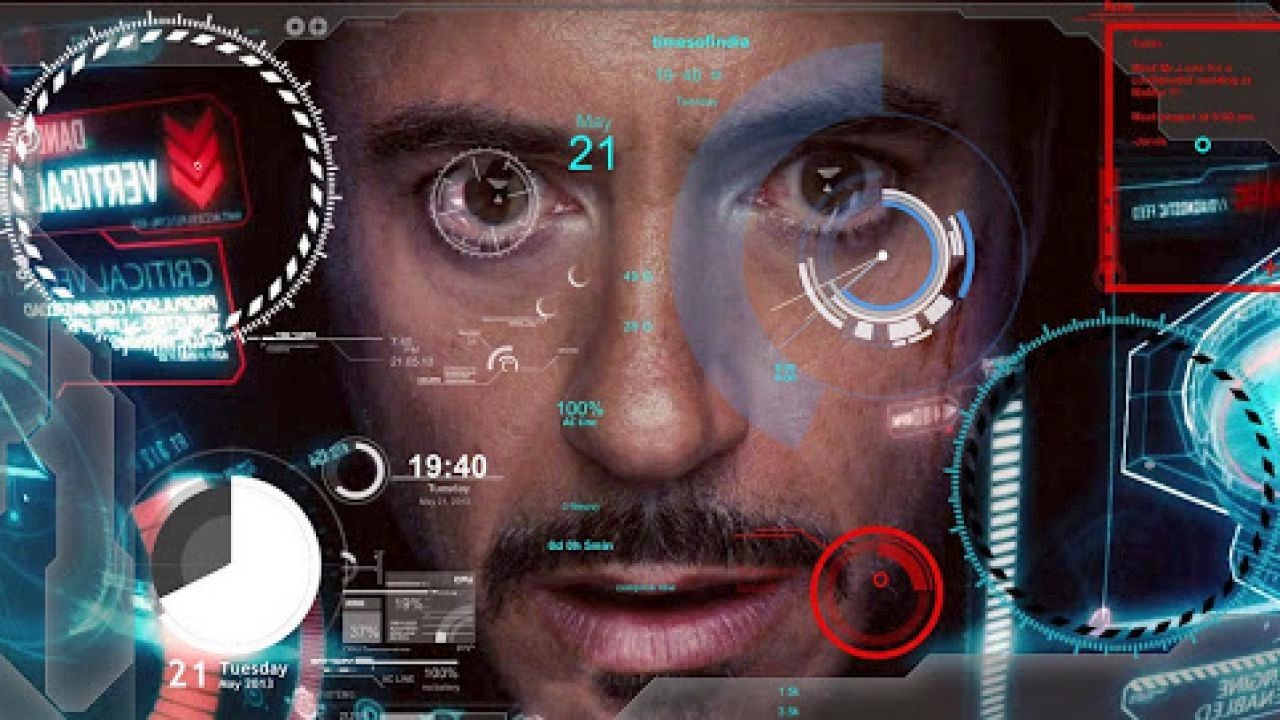

The history of AI voice assistants extends well before 2011, when Siri was first introduced to the world… although many of the earlier examples came from fiction and pop culture. Siri debuted as the first-ever voice assistant relying on AI, but you can’t really credit Siri with being the first automated female voice, because IVR (Interactive Voice Response) had dominated phone conversations for years. Remember the automated voices you heard when you called the service center of your bank, cable company, or internet provider? More often than not those voices were female, paving the way for Siri in 2011. In fact, the trend dates back to 1878, when Emma Nutt became the first woman telephone operator and ushered in a female-dominated profession. Women operators then naturally set the stage for female-voiced IVR calls. However, while IVR calls were mostly a set of pre-recorded responses, Siri didn’t blurt out templated, pre-recorded sentences. She was trained on the voice of a real woman and conversed with you (at least by the standards of the time) like an actual human. The choice of a female voice for Siri was influenced by user studies and cultural factors, with the aim of making the AI seem friendly and approachable. This decision was not an isolated case; it marked the beginning of a broader trend in the tech industry.

In pop culture, however, the inverse often held true. Three years before Siri, JARVIS took the stage in the 2008 movie Iron Man as a male voice assistant. Although somewhat robotic, JARVIS could do pretty much anything, like control every tiny detail of Tony Stark’s house, suit, and life… and potentially even go rogue. That aside, studies show something very interesting about how humans perceive female voices.

JARVIS helping control Iron Man’s supersuit

Historically, Robots are Male, and Voice Assistants are Female

The predominance of female voices in AI systems is not a random occurrence. Several factors contribute to this trend:

- User Preference: Research indicates that many users find female voices more soothing and pleasant. This preference often drives the design choices of AI developers who seek to create a comfortable user experience.

- The Emotional Connection: Female voices are traditionally associated with helpful and nurturing roles. This aligns well with the purpose of many AI systems, which are designed to assist and support users in various tasks.

- Market Research: Companies often rely on market research to determine the most effective ways to engage users. Female voices have consistently tested well in these studies, leading to their widespread adoption.

- Cultural Influences: There are cultural and social influences that shape how voices are perceived. For instance, in many cultures, female voices are stereotypically associated with service roles (e.g., receptionists, customer service), which can influence design decisions.

These are only theories and studies, though, and the flip side is equally interesting. Physical robots are often built with male physiques and proportions, given that their main jobs, lifting objects and moving cargo around, have traditionally been done by men too. Pop culture plays a massive role again: the Transformers are predominantly male, as are the Terminator, the T-1000, Ultron, C-3PO, and RoboCop. The list is endless.

What Do Studies Say on Female vs. Male AI Voices?

Numerous studies have analyzed the impact of gender in AI voices, and their findings help explain user preferences and perceptions. Here’s what they suggest:

- Likability: Research indicates that users generally find female voices more likable. This can enhance the effectiveness of AI in customer service and support roles, where user comfort and trust are paramount.

- Comfort and Engagement: Female voices are often perceived as more comforting and engaging, which can improve user satisfaction and interaction quality. This is particularly important in applications like mental health support, where a soothing tone can make a significant difference.

- Perceived Authority: Male voices are sometimes perceived as more authoritative, which can be advantageous in contexts where a strong, commanding presence is needed, such as navigation systems or emergency alerts. However, this perception can vary widely based on individual and cultural differences.

- Task Appropriateness: The suitability of a voice can depend on the specific task or context. For example, users might prefer female voices for personal assistants who manage everyday tasks, while male voices might be preferred for financial or legal advice due to perceived authority.

- Cognitive Load: Some research suggests that the perceived ease of understanding and clarity of female voices can reduce cognitive load, making interactions with AI less mentally taxing and more intuitive for users.

- Mansplaining, A Problem: The concept of “mansplaining” — when a man explains something to someone, typically a woman, in a condescending or patronizing manner — can indirectly influence the preference for female AI voices. Male voices might be perceived as more authoritative, which can sometimes come across as condescending. A male AI voice disagreeing with you or telling you something you already know can feel much more unpleasant than a female voice doing the same thing.

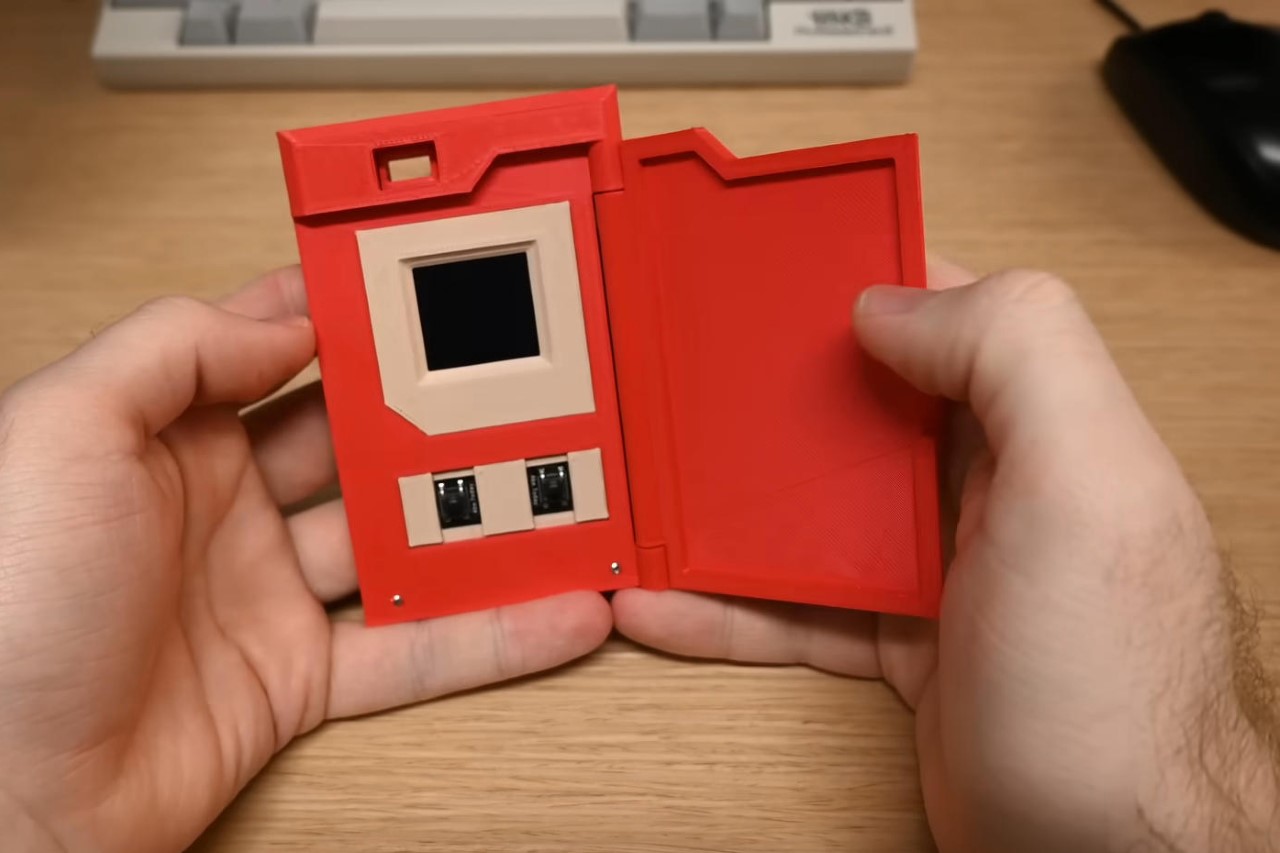

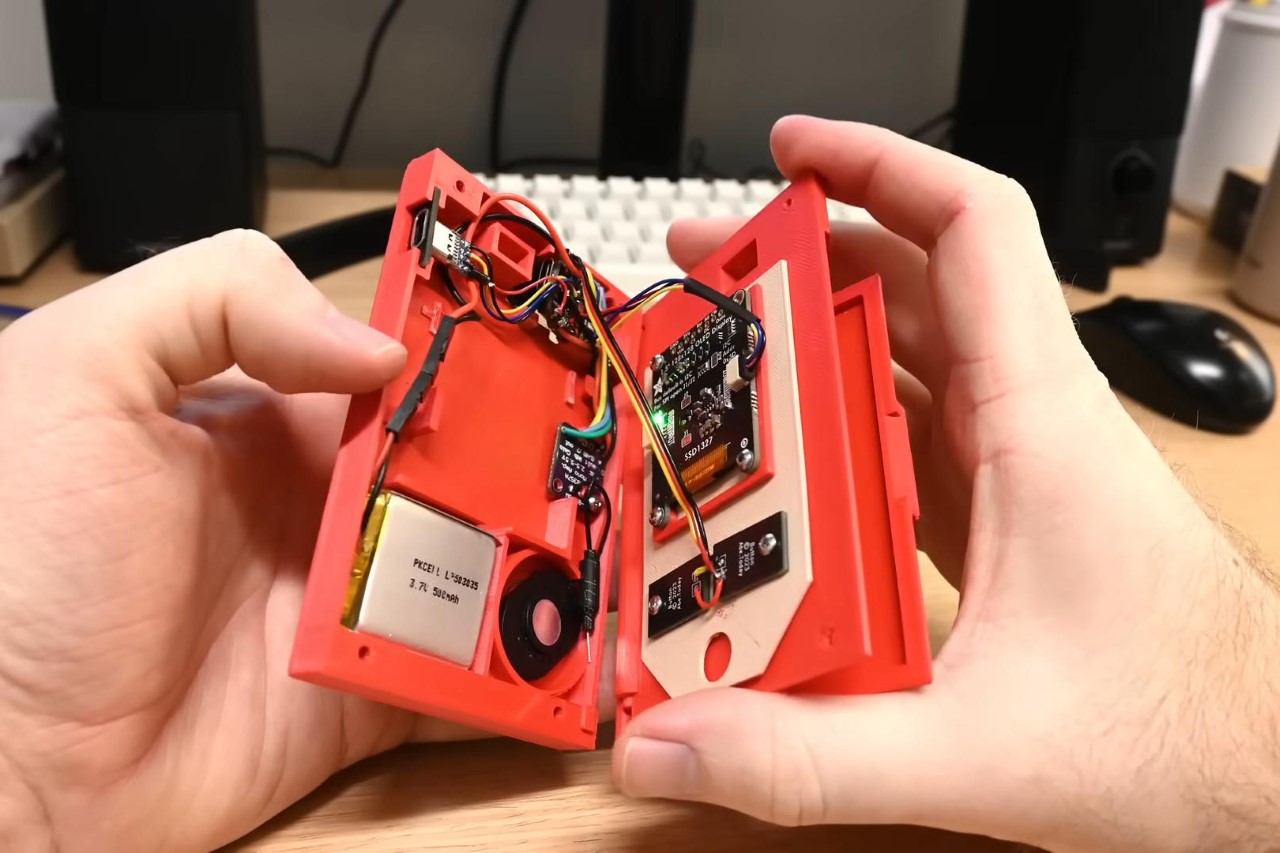

The 2013 movie Her had such a major impact on society and culture that Hong Kong-based Ricky Ma even built a humanoid version of Scarlett Johansson

Do Female AI Voices Help Women Be Taken More Seriously in the Future?

20 years back, it was virtually impossible to determine how addictive and detrimental social media was going to be to our health. We’re at the point in the road where we should be thinking of the implications of AI. Sure, the obvious discussion is about how AI could replace us, flood the airwaves with potential misinformation, and make humans dumb and ineffective… but before that, let’s just focus on the social impact of these voices, and what they do for us and the generations to come. There are a few positive impacts to this trend:

- Normalization of Female Authority: Regular exposure to female voices in authoritative and knowledgeable roles can help normalize the idea of women in leadership positions. This can contribute to greater acceptance of women in such roles across various sectors.

- Shifting Perceptions: Hearing female voices associated with expertise and assistance can subtly shift societal perceptions, challenging stereotypes and reducing gender biases.

- Role Models: AI systems with confident and competent female voices can serve as virtual role models, demonstrating that these traits are not exclusive to men and can be embodied by women as well.

However, the impact of this trend depends on the quality and neutrality of the AI’s responses, which is doubtful at best. If female-voiced AI systems consistently deliver accurate and helpful information, they can enhance the credibility of women in technology and authoritative roles… but what about the opposite?

Female AI Voices Running on Male-biased Databases

The obvious problem, however, is that these AI assistants are still, more often than not, coded by men who may bring their own subtle (or obvious) biases into how these AI bots operate. Moreover, a vast share of the data fed into these LLMs (Large Language Models) was created by men. Culture, literature, politics, and science have all been dominated by men for centuries, with women only very recently playing a larger and more visible role in these fields. All this has a distinct and noticeable effect on how the AI thinks and operates. Having a female voice doesn’t change that. If anything, it has an unintended negative effect, because the biased output now appears to come from a woman.

There’s really no problem when the AI is working with hard facts… but it becomes an issue when the AI needs to share opinions. Biases can undermine an AI’s credibility, misrepresent the very women its voice is supposed to stand for, promote harmful stereotypes, and reinforce the prejudices users already hold. We’re already noticing a massive spike in the usage of words like ‘delve’ and ‘testament’ because of how often LLMs use them. Think about all the stuff we CAN’T see, and how it may affect life and society a decade from now.

In 2014, Alex Garland’s Ex Machina showed how a lifelike female robot passed the Turing Test and won the heart of a young engineer

The Future of AI Voice Assistants

I’m no coder/engineer, but here’s where AI voice assistants should be headed and what steps should be taken:

- Diverse Training Data: Ensuring that training data is diverse and inclusive can help mitigate biases. This involves sourcing data from a wide range of contexts and ensuring a balanced representation of different genders and perspectives.

- Bias Detection and Mitigation: Implementing robust mechanisms for detecting and mitigating bias in AI systems is crucial. This includes using algorithms designed to identify and correct biases in training data and outputs.

- Inclusive Design: Involving diverse teams in the design and development of AI systems can help ensure that different perspectives are considered, leading to more balanced and fair AI systems.

- Continuous Monitoring: AI systems should be continuously monitored and updated to address any emerging biases. This requires ongoing evaluation and refinement of both the training data and the AI algorithms.

- User Feedback: Incorporating user feedback can help identify biases and areas for improvement. Users can provide valuable insights into how the AI is perceived and where it might be falling short in terms of fairness and inclusivity.
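To make the first two points a little more concrete, here is a minimal Python sketch of what a very crude representation check on training text could look like. Everything in it is an assumption made for illustration: the word lists, the function names, and the three-sentence sample corpus are all hypothetical, and a real bias audit would need far richer signals than counting pronouns.

```python
import re
from collections import Counter

# Hypothetical word lists, chosen only for illustration. Counting a handful
# of pronouns and nouns is a rough proxy, not a real bias audit.
FEMALE_TERMS = {"she", "her", "hers", "woman", "women"}
MALE_TERMS = {"he", "him", "his", "man", "men"}


def gender_term_counts(texts):
    """Count female- and male-coded terms across an iterable of strings."""
    counts = Counter(female=0, male=0)
    for text in texts:
        # Lowercase the text and split it into simple word tokens.
        for token in re.findall(r"[a-z']+", text.lower()):
            if token in FEMALE_TERMS:
                counts["female"] += 1
            elif token in MALE_TERMS:
                counts["male"] += 1
    return counts


def female_share(counts):
    """Fraction of gendered terms that are female-coded, or None if none found."""
    total = counts["female"] + counts["male"]
    return counts["female"] / total if total else None


# Tiny made-up corpus standing in for real training data.
sample_corpus = [
    "He wrote the paper and his colleagues praised him.",
    "She led the lab, and her team shipped the product.",
    "The men on the board voted; he abstained.",
]

counts = gender_term_counts(sample_corpus)
share = female_share(counts)
print(f"female-coded terms: {counts['female']}, male-coded terms: {counts['male']}")
print(f"female share of gendered terms: {share:.2f}")
```

A share far from 0.5 wouldn’t prove a dataset is biased, but it is the kind of cheap signal that could flag a corpus for closer human review as part of continuous monitoring.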

AI assistants aren’t going anywhere. There was a time not too long ago when it seemed that AI assistants were dead. At the end of 2022, reports emerged that the Amazon division responsible for Alexa was on track to lose around $10 billion that year, and Alexa looked like a failed endeavor. That same month, ChatGPT made its debut. Cut to today, and AI assistants have suddenly become mainstream again, so much so that almost every company and startup is looking for ways to integrate AI into its products and services. Siri and GPT-4o are just the beginning of this new female-voice-led frontier… it’s important that we understand the pitfalls and avoid them before it’s too late. After all, if you remember, in the movie Terminator Salvation, Skynet took on a woman’s face too…

The post Why Are Most AI Voices Female? Exploring the Reasons Behind Female AI Voice Dominance first appeared on Yanko Design.