Ray-Ban’s Meta Wayfarer glasses have quickly become the intersection of fashion and technology, combining classic style with advanced smart features. Recently, Ray-Ban and Meta unveiled the new Shiny Transparent Wayfarer, featuring exposed internal components and Clear to Sapphire Transitions lenses. While this new model pushes the boundaries of what smart glasses can look like, the big question is: should you upgrade, especially if you already own a pair? Let’s break it down.

Designer: Ray-Ban + Meta

If Money Is No Object, Then Yes—Go for It

If price isn’t a barrier, the decision to upgrade is straightforward. At $429 USD, the Shiny Transparent Wayfarer offers a visually striking design that showcases the internal technology, creating a futuristic look that stands apart from the Matte Black version. The Clear to Sapphire Transitions lenses add another layer of sophistication, adapting to light conditions and giving the glasses a sleek sapphire tint when outdoors. This is an easy yes for those who enjoy staying at the forefront of wearable tech.

If You Want the New Lens Transition, It’s Worth Considering

If your current Ray-Ban Meta Wayfarer has standard clear lenses or basic non-adaptive sunglass lenses, upgrading to the new Transitions lenses could make a big difference in how you use the glasses day-to-day. The Clear to Sapphire Transitions lenses adjust as you move between indoor and outdoor settings, so you don’t need to switch eyewear when the lighting changes. Indoors, the lenses stay clear, providing a natural, unobstructed view. Once you step outside, they automatically darken to a sleek sapphire tint, adding a touch of style and protecting your eyes from harsh sunlight. For anyone who frequently moves between environments, this flexibility could be a major convenience.

On the other hand, if you already own a pair with Clear to Green Transitions lenses, the upgrade may not offer enough of a difference to justify the change. Both lenses provide the same adaptive functionality, adjusting to light to enhance your vision while adding a color tint. The real difference lies in the aesthetic—whether you prefer the cooler sapphire tint or the more classic green hue. If you’re satisfied with the current performance and look of your lenses, there may be little reason to make the leap unless the sapphire color truly appeals to you.

If You Want a New Design with Exposed Tech, Then Yes

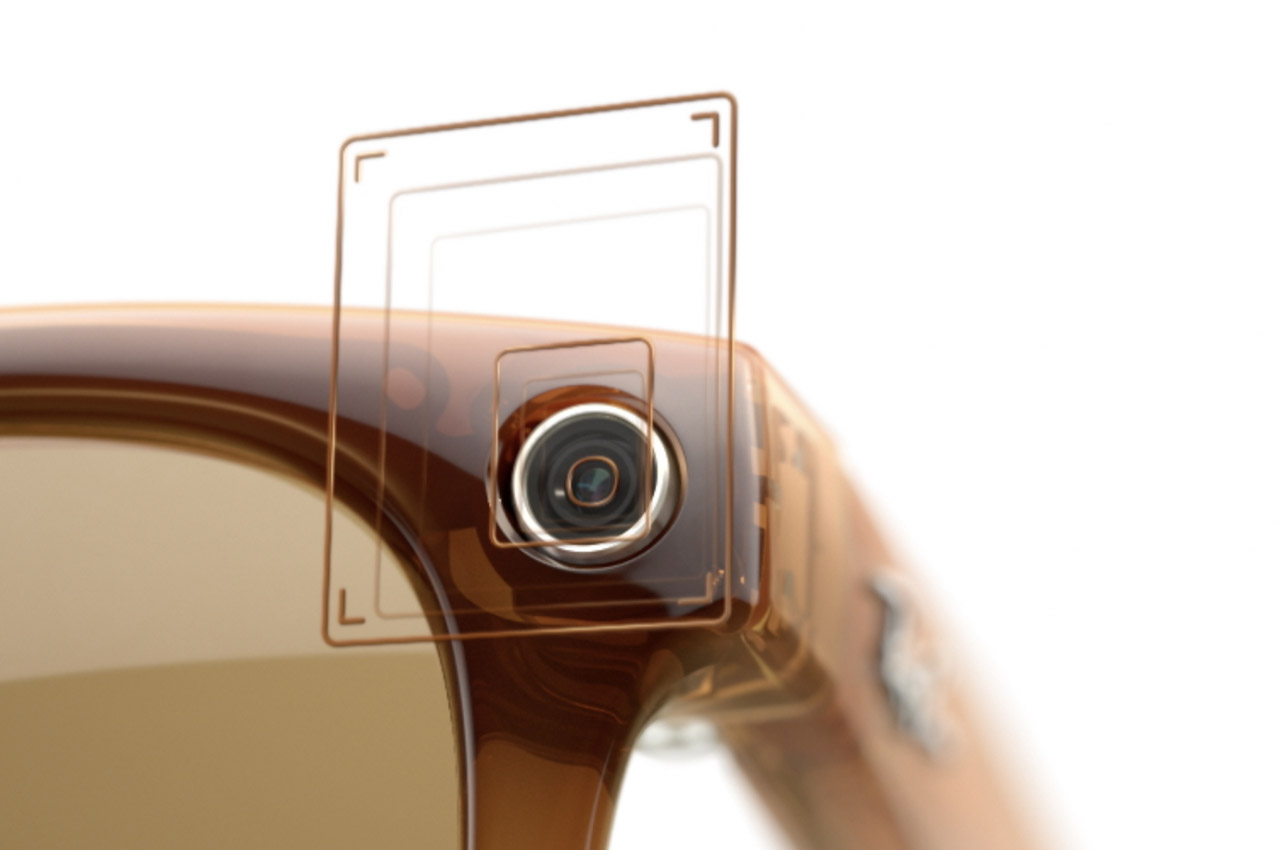

The most noticeable difference in the new model is the Shiny Transparent frame. It exposes the inner workings of the glasses, giving them a high-tech look that contrasts with the more traditional Matte Black frame and puts the technology that powers them on display. It’s an intriguing design choice for those who appreciate a bold, futuristic look.

If you’re drawn to a more tech-forward, modern aesthetic, this new design is worth considering. The transparent frame is eye-catching and adds a fresh dimension to the Ray-Ban Meta Wayfarer collection. For those who want their eyewear to make a visual statement, the exposed components are a step forward in wearable tech design. However, if you prefer the more classic, understated look of the Matte Black Wayfarer, you might find that the new frame doesn’t offer enough reason to switch.

For Me, It’s a Hard No

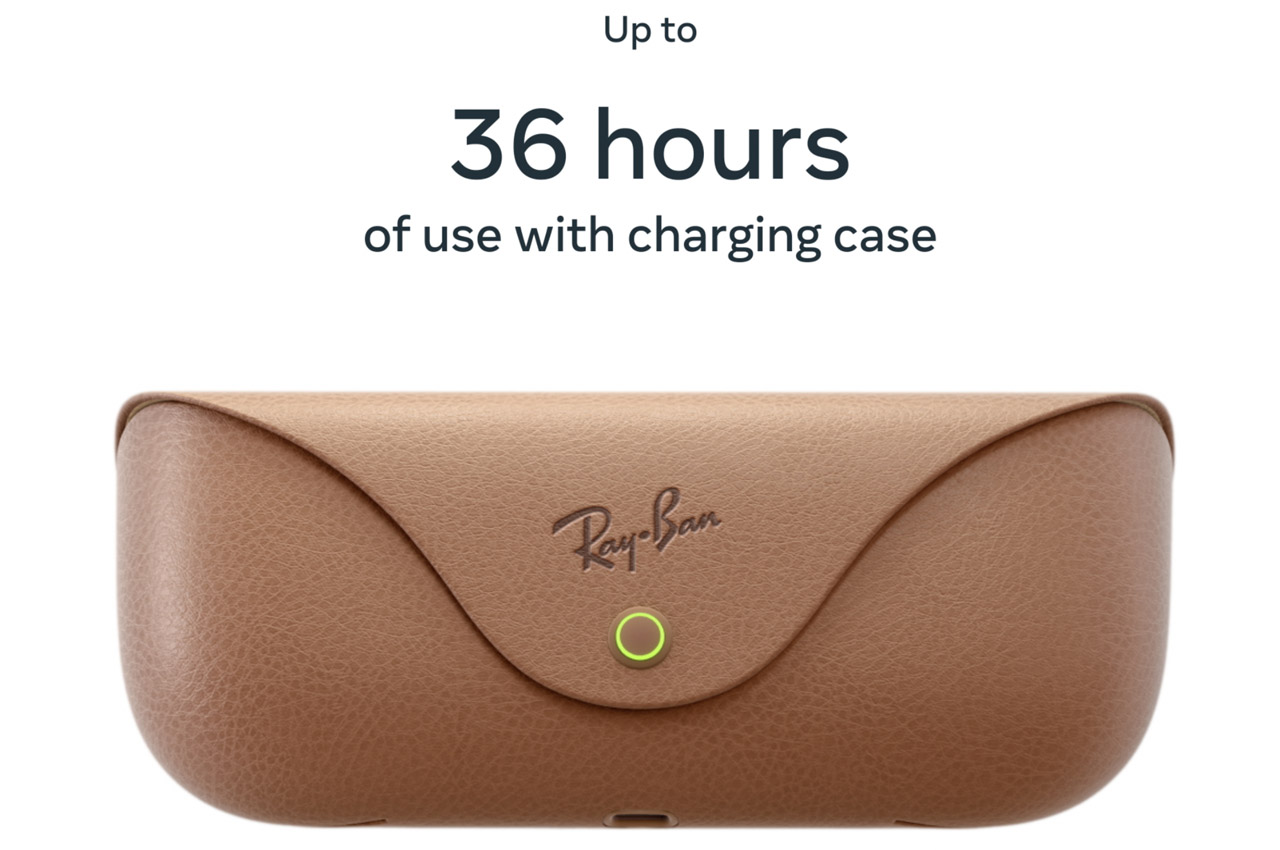

For anyone who already owns the Matte Black Wayfarer with Clear to Green Transitions lenses, upgrading to the new Shiny Transparent model may not be necessary. Your current pair offers the same core features—AI-powered assistance, a 12MP camera, open-ear speakers, and a touchpad for easy control. The Clear to Green Transitions lenses provide excellent functionality, and if you’re happy with the design and tech you already have, there’s no pressing need to make the switch.

The Introduction of AI-Powered Features

With the recent updates, Ray-Ban and Meta have significantly improved the AI capabilities of the glasses. You can now say “Hey Meta” to start a voice command and then follow up with additional commands without repeating the wake word. The glasses can also remember important details, like where you parked your car, and set reminders, such as one for when you land after a flight. Being able to send voice messages via WhatsApp or Messenger while your hands are occupied adds an extra layer of convenience for staying connected on the go.

One of the more impressive AI features is real-time video assistance. Whether you’re exploring a new city or browsing the aisles of a grocery store, Meta AI can offer real-time help by identifying landmarks or suggesting meals based on the ingredients you’re looking at. Additionally, real-time language translation for Spanish, French, and Italian can remove language barriers, and future updates will likely support more languages.

Expanding Partnerships with Major Platforms

The glasses also support deeper integrations with platforms like Spotify and Amazon Music, and Ray-Ban has expanded these offerings to include Audible and iHeart. Now you can use voice commands to search for and play music or audiobooks without touching your phone. This makes listening even more seamless, letting you ask questions like “What album is this from?” while on the move. These expanded partnerships deepen the glasses’ role in day-to-day media consumption.

The collaboration with Be My Eyes is another significant step in making the glasses more accessible. This app, designed for individuals who are blind or have low vision, pairs users with sighted volunteers who provide real-time assistance. The glasses’ camera allows the volunteer to see what the wearer sees, enabling them to help with tasks like reading mail or navigating new environments.

Are You Going for It?

Ultimately, the decision to upgrade comes down to personal preference and how much you value the new design and lens options. If money isn’t an issue or you’re drawn to the transparent frame and sapphire lenses, the upgrade makes sense. However, if you’re content with your current Matte Black Wayfarer with Clear to Green Transitions lenses, there’s no pressing reason to switch. The new features and design are exciting, but your existing pair still holds up as a stylish, highly functional piece of wearable tech.

The post Should you upgrade to the new Ray-Ban Meta Wayfarer Limited-Edition Transparent Model? first appeared on Yanko Design.