Apple Intelligence is a revolutionary step in making your devices smarter and more in tune with your daily life. Imagine having an assistant that not only responds to your commands but also understands your needs and habits. Unlike traditional AI, which relies on generic data, Apple Intelligence integrates deeply with your personal context. It knows your routine, preferences, and relationships, making interactions more meaningful and tailored specifically to you.

What is Apple Intelligence?

Apple Intelligence redefines how you interact with technology, making it feel like a natural extension of yourself. Whether you are managing your schedule, writing emails, or capturing moments, the intelligence within your devices anticipates your needs and offers solutions proactively. It is also built with privacy at its core, so your personal data remains secure and confidential. In an era where privacy concerns are paramount, Apple Intelligence sets a new standard by processing most tasks directly on your device and turning to Apple’s Private Cloud Compute only when a task needs more processing power.

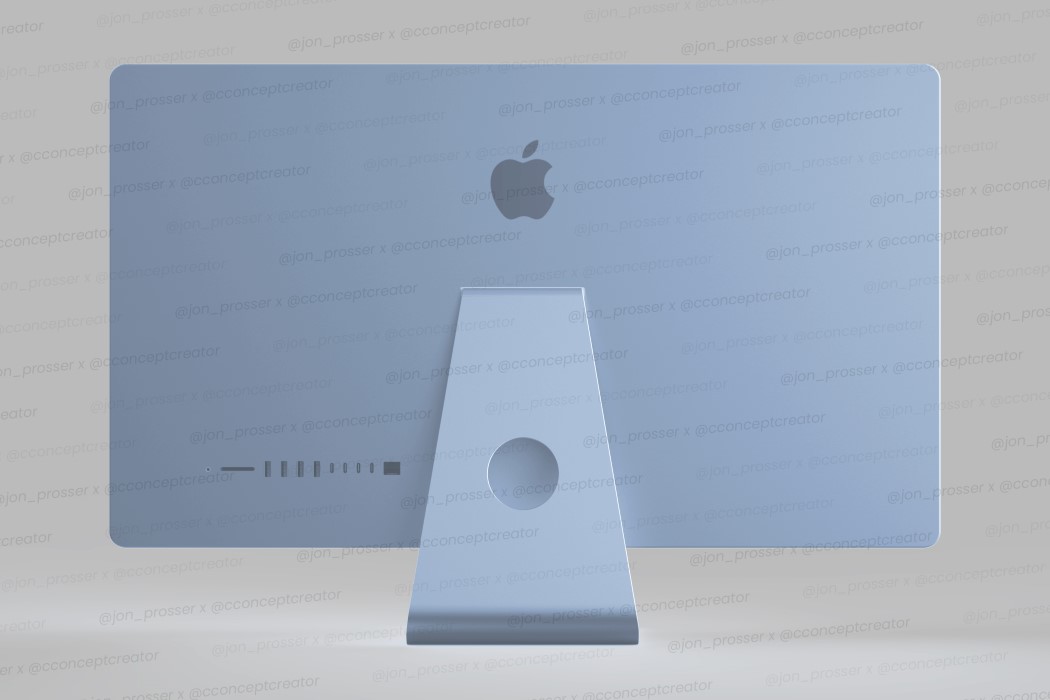

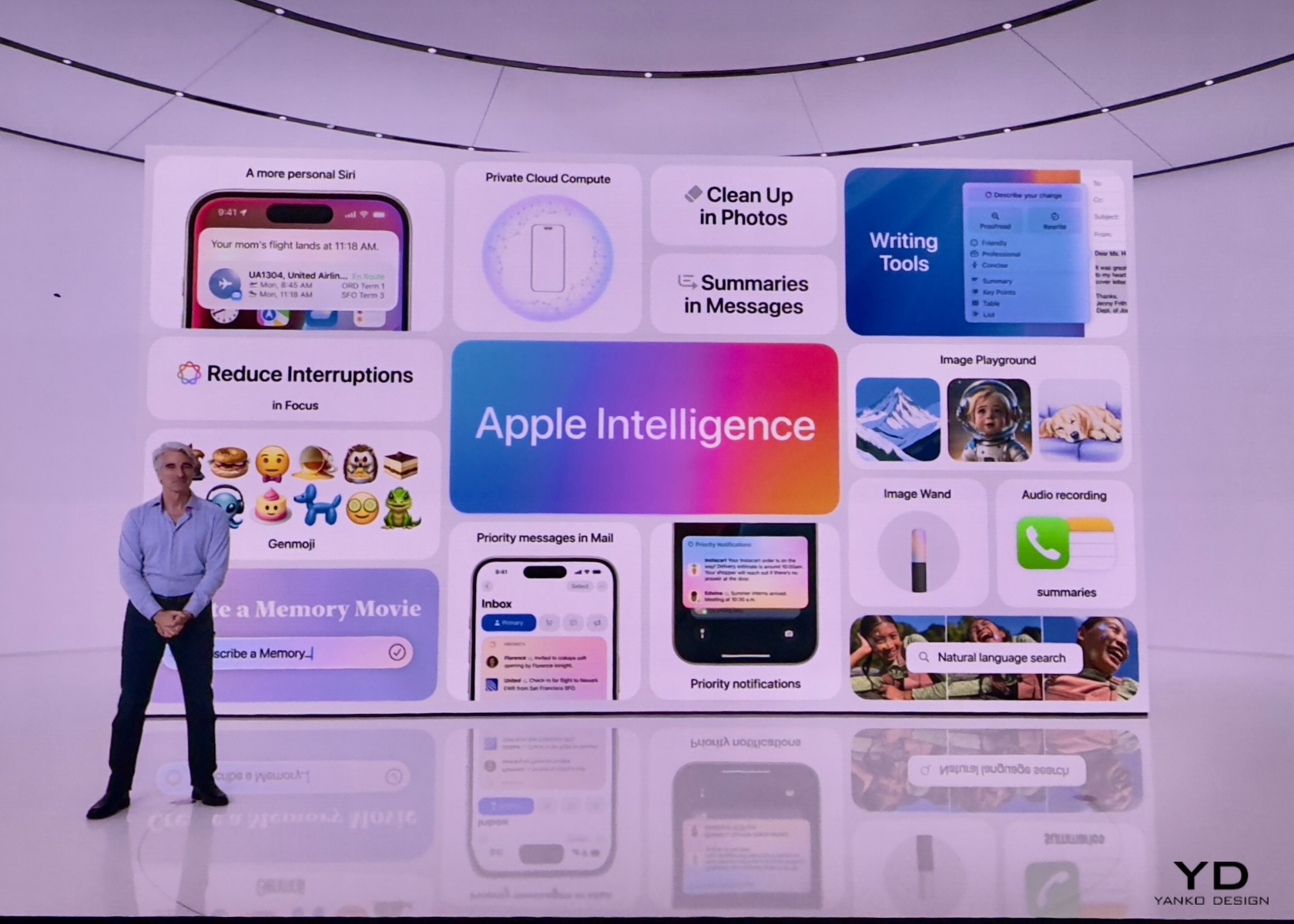

Apple Intelligence

Apple Intelligence is designed to simplify tasks, anticipate your needs, and provide relevant suggestions, all while keeping your data secure. This transformation goes beyond adding new features; it’s about enhancing your user experience. For busy professionals, students, and families, having a device that understands and adapts to your unique context can streamline daily tasks, reduce stress, and enhance productivity. By prioritizing user privacy, Apple Intelligence also addresses growing concerns about data security, making it a trustworthy assistant in the digital age.

Is Apple Intelligence the First True GenAI Ecosystem That’s Secure?

Apple has certainly set the bar high. Apple Intelligence keeps your data private by performing most of the processing on your device. For more complex tasks that require additional computing power, it uses Private Cloud Compute. This approach lets Apple harness the full potential of generative AI while, according to Apple, using your data only to fulfill your request and never storing it or making it accessible to Apple.

Apple’s commitment to privacy extends to its server operations as well. All server-based models run on dedicated Apple silicon servers, and independent experts can inspect the code running on those servers to verify Apple’s privacy claims. This transparency is meant to give you confidence that your information is handled with care. By combining cutting-edge AI capabilities with rigorous privacy standards, Apple Intelligence delivers powerful, personalized intelligence without sacrificing security.

The importance of this secure ecosystem cannot be overstated. At a time when data breaches and privacy concerns are rampant, Apple Intelligence offers peace of mind. Users can rely on their devices to handle sensitive information and perform complex tasks without fear of data misuse. This makes Apple Intelligence more than a technological advancement: it plays a vital role in fostering user trust and safety in digital environments.

Elements and Features of Apple Intelligence

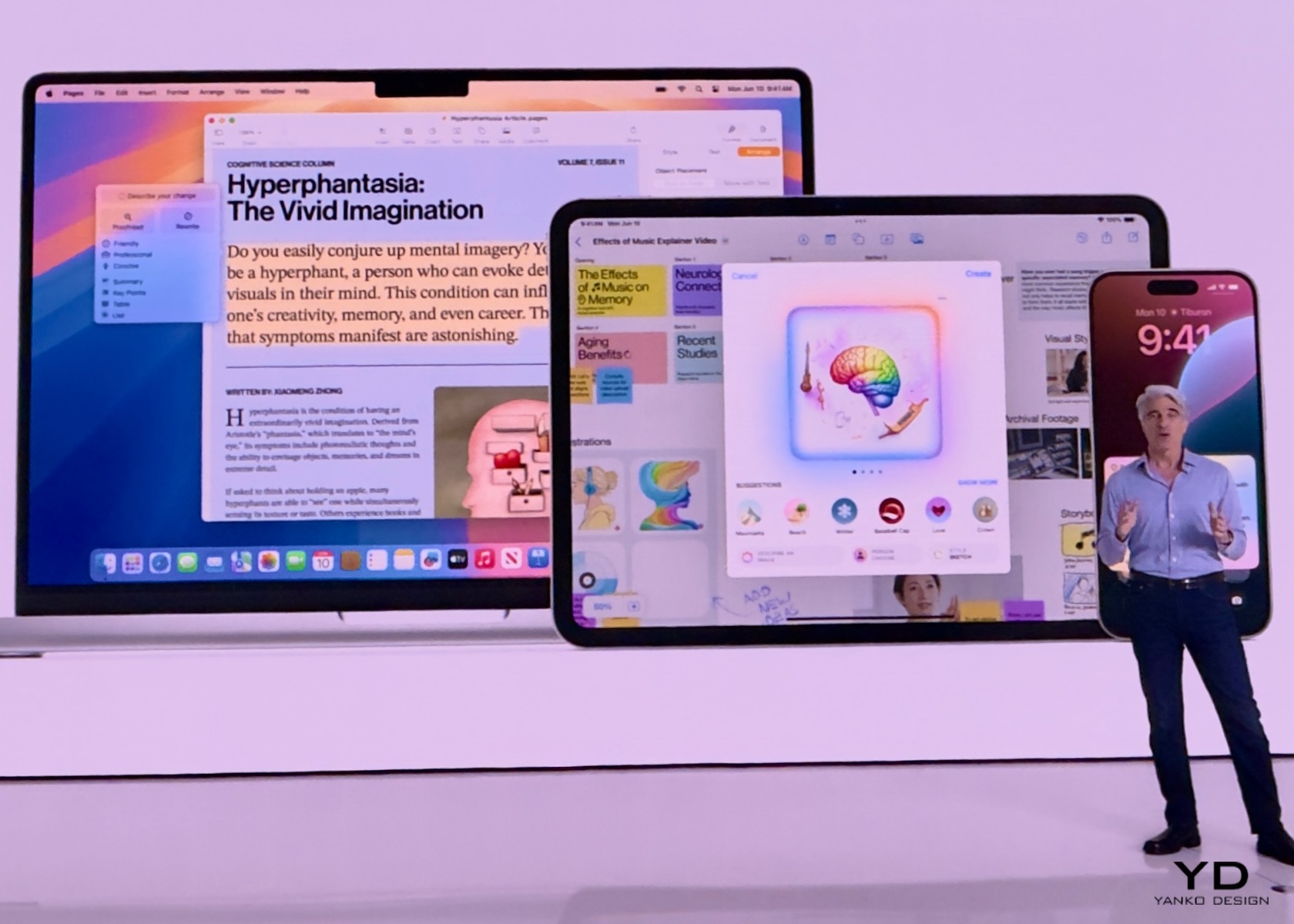

Apple Intelligence is built into your iPhone, iPad, and Mac, bringing a suite of powerful features that make everyday tasks easier and more enjoyable. Let’s dive into what this means for you.

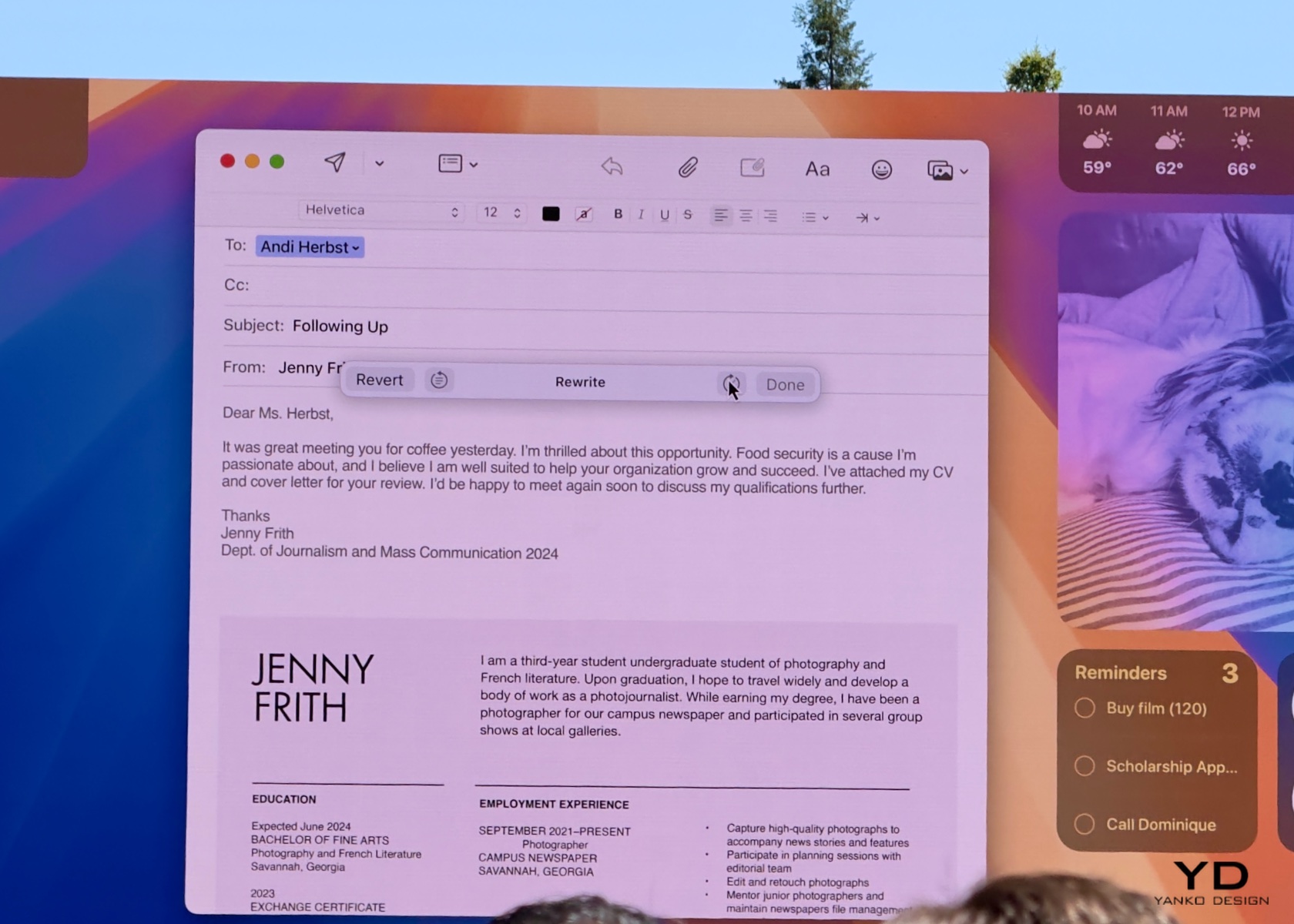

1. Writing Tools

Apple Intelligence includes systemwide Writing Tools that act like a personal editor wherever you write. Whether you’re drafting an email, jotting down notes, or crafting a blog post, these tools improve your writing with suggestions and corrections. Rewrite lets you adjust the tone of your text to suit different audiences, whether you need to sound more formal in a cover letter or add some humor to a party invitation. Proofread checks your grammar, word choice, and sentence structure, suggesting edits along with explanations to help you improve.

Summarize is another fantastic feature, perfect for condensing long articles or documents into digestible key points or paragraphs. Imagine being able to take a lengthy research paper and quickly create a concise summary for easier review. These tools are integrated into apps like Mail, Notes, and Pages, as well as third-party apps, making them accessible whenever and wherever you need them. With Apple Intelligence, your writing becomes clearer, more precise, and tailored to any situation.

These tools are particularly useful because they enhance communication and productivity. For students, professionals, and casual users alike, having an intelligent assistant that helps refine and improve writing can save time and reduce errors. Whether you’re preparing a report, sending an important email, or simply jotting down ideas, these features ensure that your written communication is effective and polished.

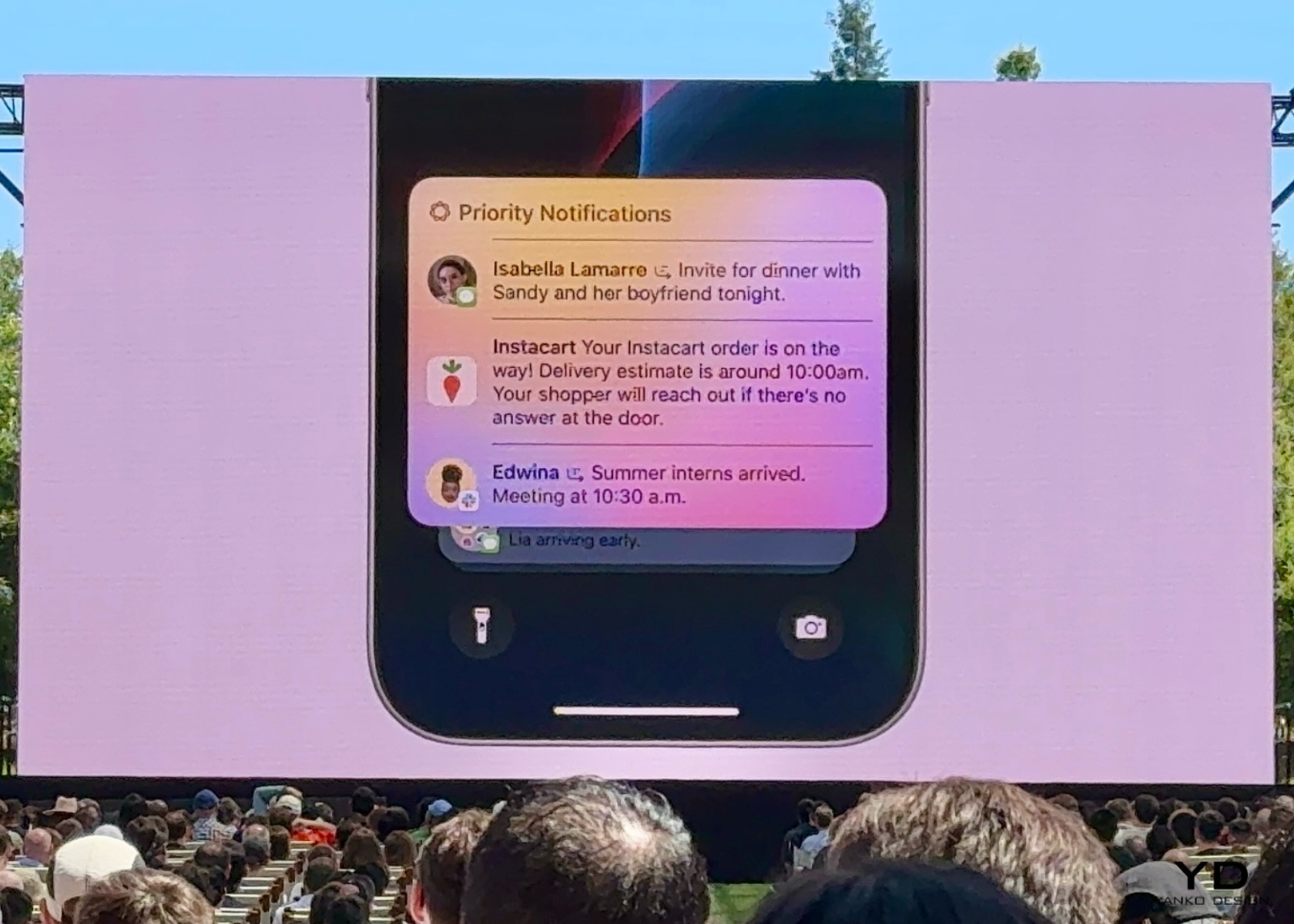

2. Priority Messages and Notifications

Managing your communications has never been easier with Apple Intelligence’s Priority Messages and Notifications. This feature ensures that you see the most important emails and notifications first. For example, a same-day dinner invitation or a boarding pass for an upcoming flight will appear at the top of your inbox, so you never miss critical information. Instead of previewing the first few lines of each email, you can see summaries that highlight the key points, making it easier to manage your inbox efficiently.

The new Reduce Interruptions Focus mode is a game-changer for those who need to stay focused on important tasks. It filters notifications to show only the ones that require immediate attention, such as a text about an early pickup from daycare. This means fewer distractions and more time to concentrate on what matters most. By prioritizing urgent messages and minimizing unnecessary interruptions, Apple Intelligence helps you stay organized and in control of your communications.

These features are crucial for anyone who juggles multiple responsibilities. By keeping you informed of the most important messages and minimizing distractions, Apple Intelligence helps improve productivity and reduce stress. Whether you’re managing work emails, family schedules, or social invitations, having a streamlined communication system ensures that you stay on top of what truly matters without getting overwhelmed by the noise.

3. Audio Recording and Transcription

In the Notes and Phone apps, Apple Intelligence introduces advanced audio recording and transcription capabilities. This feature is incredibly useful for capturing detailed notes during calls and meetings. When you record a conversation, Apple Intelligence transcribes the audio in real time, so you can read and review the content immediately. This makes it easy to keep track of important discussions and decisions without missing any details.

Once the recording is finished, Apple Intelligence generates a summary of the key points, making it simple to recall and share the most important information. For instance, after a brainstorming session, you can quickly review the main ideas and action items without having to listen to the entire recording. This feature saves time and enhances productivity by ensuring that you have accurate and organized notes at your fingertips.

The importance of these features lies in their ability to enhance efficiency and accuracy. For students, professionals, and anyone who attends meetings or interviews, having an automatic transcription and summary tool can significantly reduce the time spent on note-taking and ensure that no critical information is missed. This allows you to focus more on the conversation and less on capturing every detail manually.

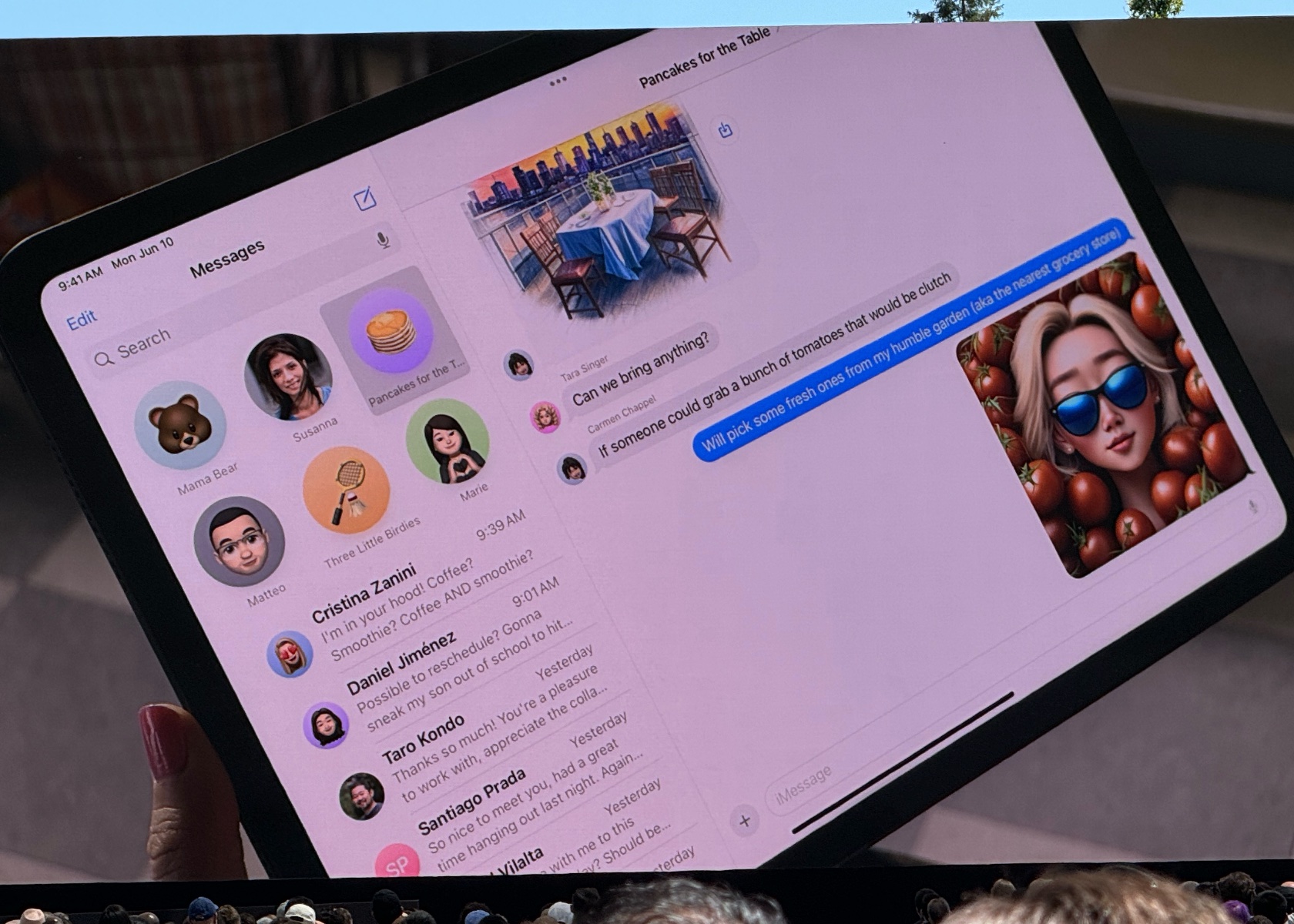

4. Image Playground and Genmoji

Image Playground and Genmoji are two exciting features powered by Apple Intelligence that make communication and self-expression more fun and creative. Image Playground lets you create engaging images in seconds, choosing from styles like Animation, Illustration, or Sketch. It’s built right into apps like Messages, so you can easily spice up your conversations with personalized visuals. Whether you’re creating an image of your family hiking or a fun meme for a group chat, the possibilities are endless.

Genmoji takes emojis to the next level by allowing you to create unique, personalized emojis based on descriptions or photos. For example, typing “smiley relaxing wearing cucumbers” generates a Genmoji that you can use in your messages or as a sticker. You can even create Genmoji of friends and family members based on their photos, adding a personal touch to your digital interactions. These features make it easier and more enjoyable to express yourself visually, whether you’re chatting with friends or creating content for social media.

These features are especially significant in today’s online interactions. Visual elements, such as images and emojis, are essential for expressing ourselves. With tools to create personalized and unique visuals, Apple Intelligence enriches our connections with others. This approach makes conversations more engaging and allows for deeper, more personal expression, ultimately fostering stronger relationships and more enjoyable interactions.

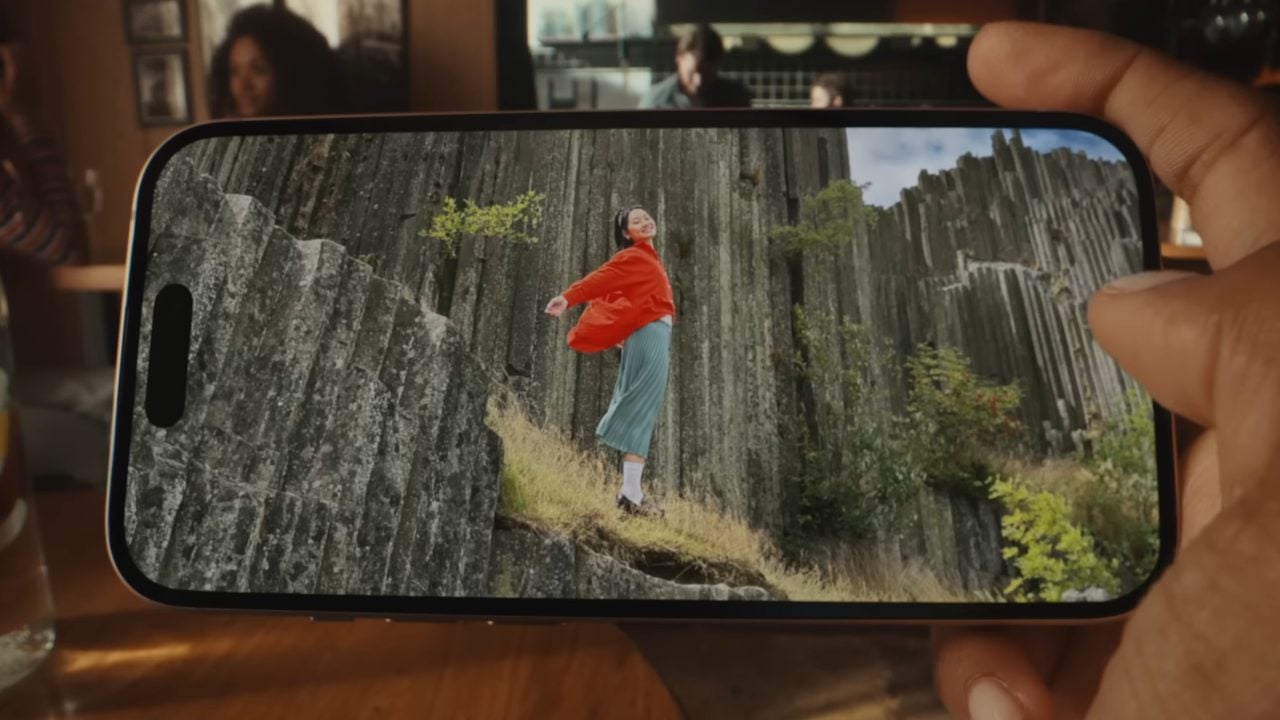

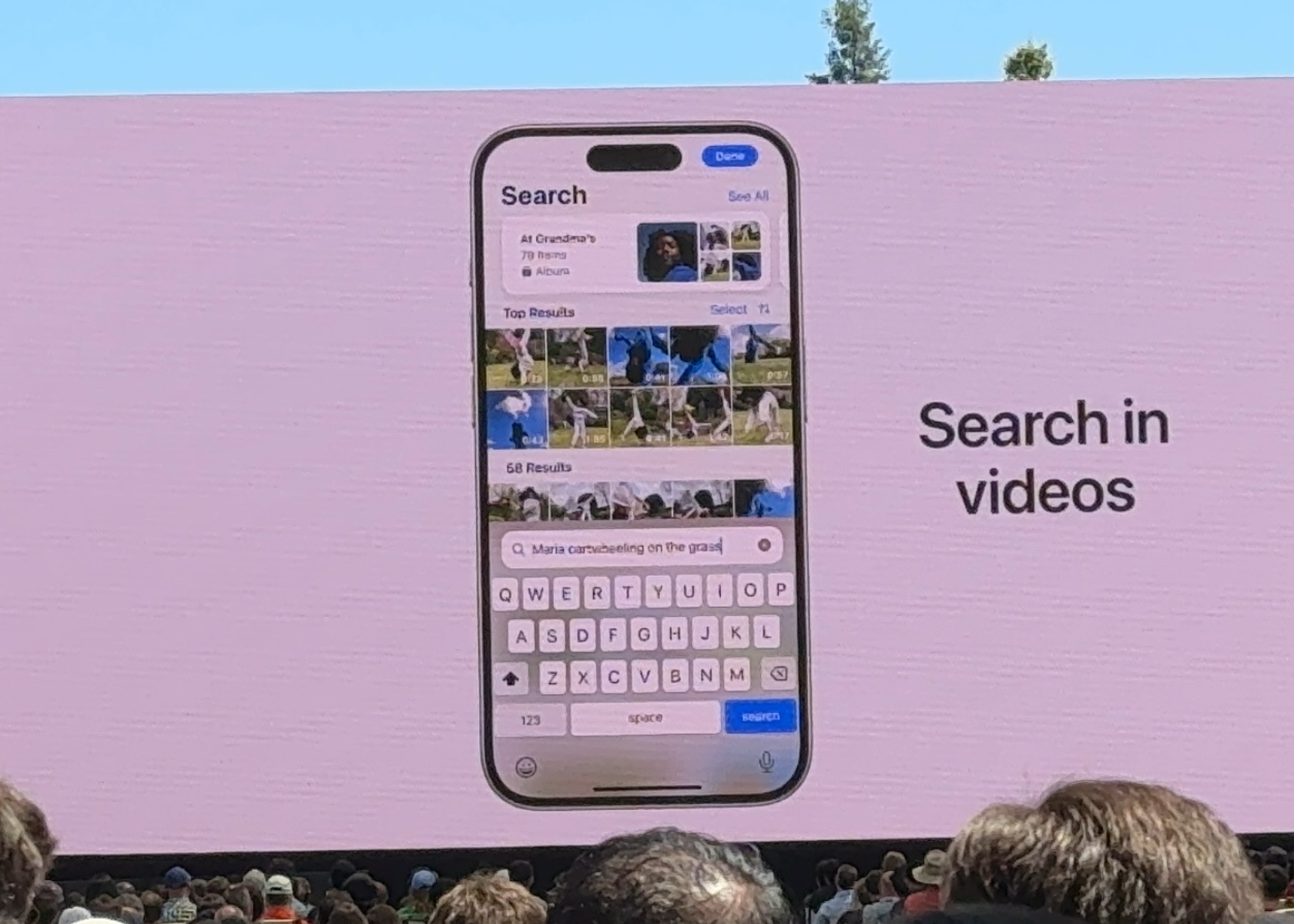

5. Enhanced Photo and Video Search

Apple Intelligence makes finding and managing your photos and videos a breeze with enhanced search and editing capabilities. Using natural language processing, you can search for specific photos and videos with simple descriptions. For example, searching for “Maya skateboarding in a tie-dye shirt” quickly pulls up the exact photo from your library. This feature saves time and makes it easier to relive your favorite moments.

The Clean Up tool is another handy feature that helps you enhance your photos by removing distracting objects from the background without altering the main subject. This is perfect for tidying up those almost-perfect shots. Additionally, the new Memories feature allows you to create movies from your photos and videos by simply typing a description. Apple Intelligence selects the best images, crafts a narrative, and even suggests music from Apple Music to match the mood. This makes it effortless to create beautiful, shareable memories from your digital collection.

These features are invaluable for everyone who captures and stores digital memories. With the sheer volume of photos and videos we accumulate, having tools that simplify searching and editing can save significant time and effort. Whether you’re organizing a photo album, creating a digital scrapbook, or just looking for that perfect picture from last summer, Apple Intelligence makes it easier to manage and enjoy your visual content.

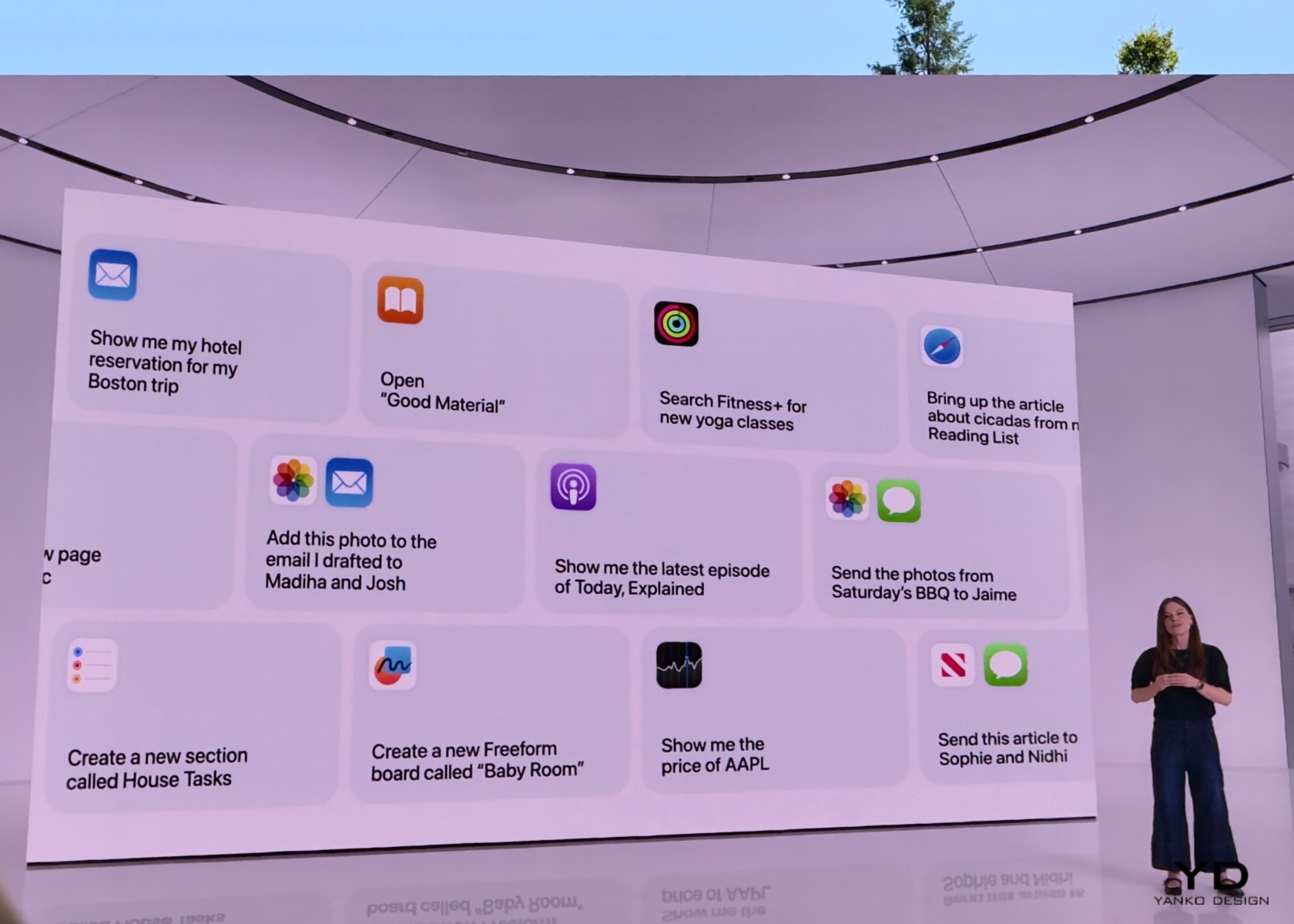

6. Siri Enhancements

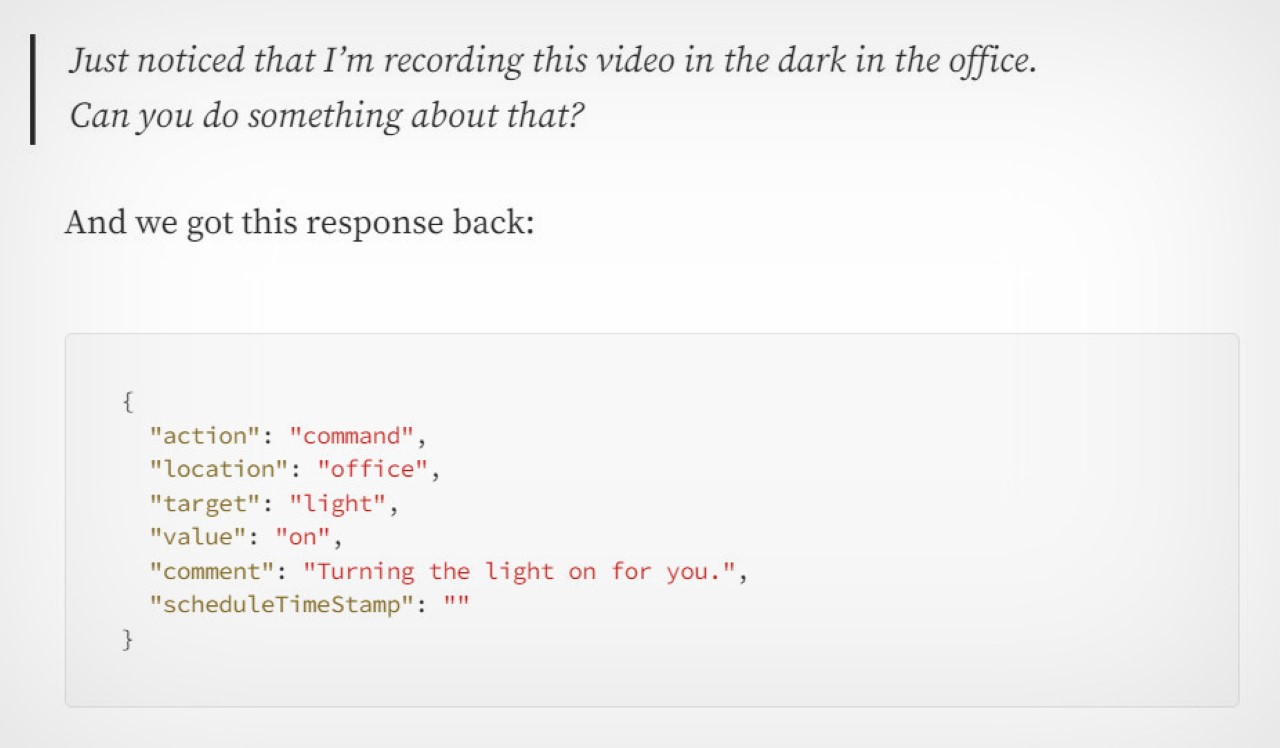

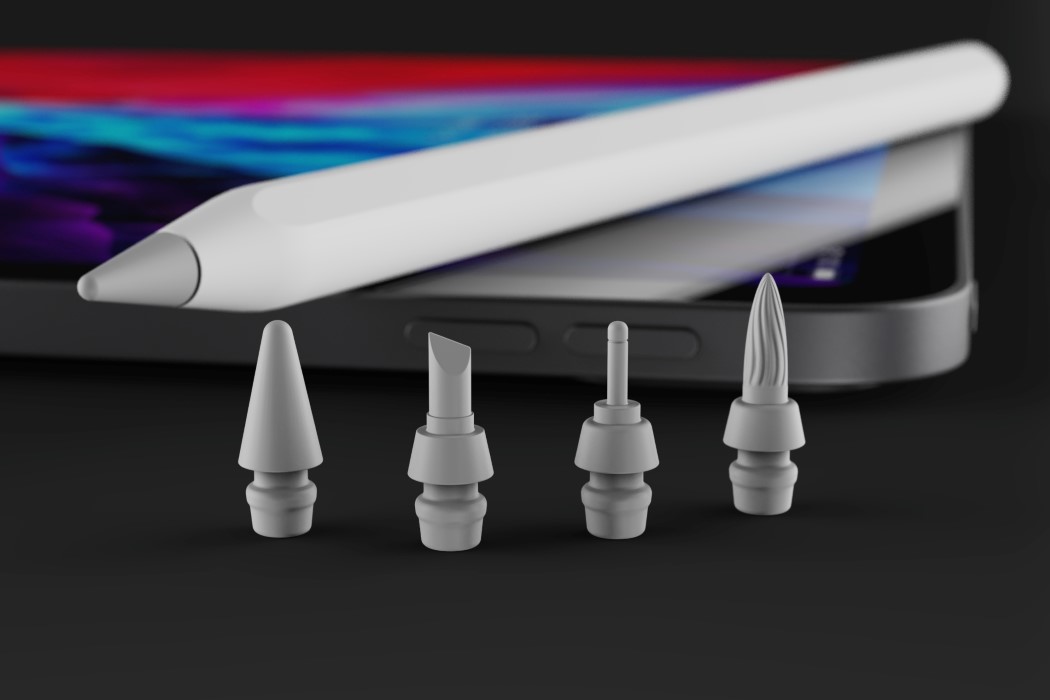

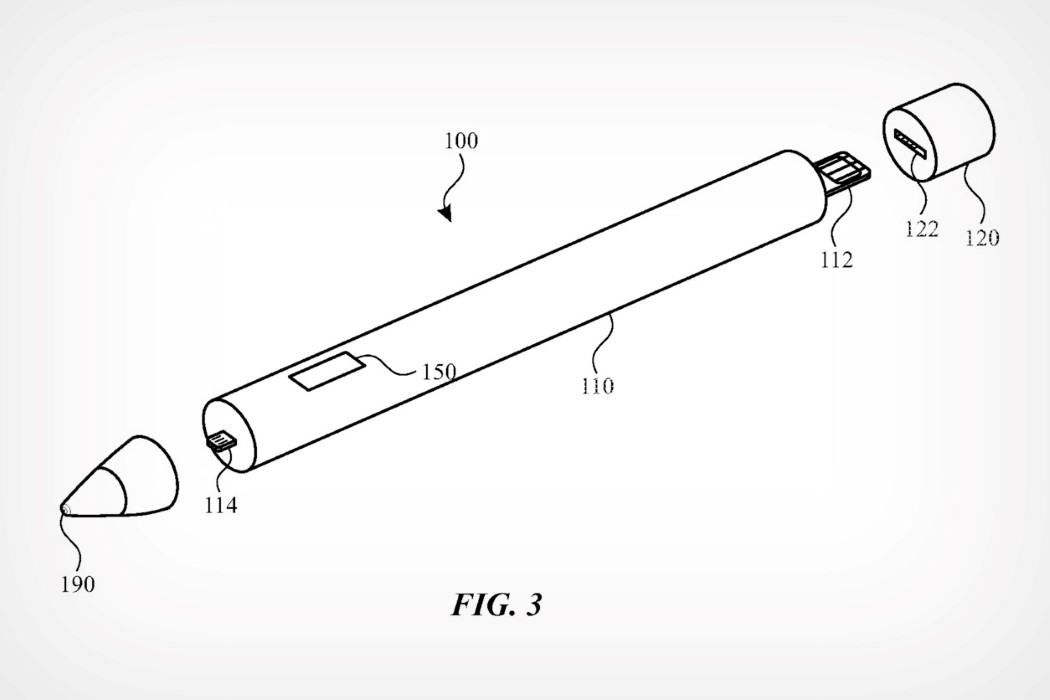

Powered by Apple Intelligence, Siri is now more capable and contextually aware than ever before. This transformation means your assistant can understand and act on a wider range of requests, making it even more useful in your daily life. Whether you need to schedule an email, switch from Light to Dark Mode, or add a new address to a contact card, Siri can handle these tasks with ease. It can follow along if you stumble over words and maintain context from one request to the next, providing a more seamless interaction.

One of the most exciting improvements is Siri’s ability to perform hundreds of new actions across Apple and third-party apps. For instance, you can ask Siri to bring up an article from your Reading List, send photos from a recent event to a friend, or play a podcast recommended by a contact. The new design adds an elegant glowing light around the edge of the screen when Siri is active, which is visually engaging and makes it easy to tell when Siri is listening.

Siri now draws on your messages, emails, and other apps to provide more personalized assistance. If a friend texts you their new address, you can simply say, “Add this address to their contact card,” and Siri will update the contact without needing additional input. Siri can also understand and follow up on previous requests, making conversations with it feel more natural. Imagine planning a day trip: you could ask about the weather at your destination, book a reservation at a local restaurant, and get directions, all in one seamless flow of conversation.

Another significant enhancement is Siri’s ability to carry context from one request to the next. For example, you can ask, “What’s the weather like near Muir Woods?” and then follow up with, “Create an event for a hike there tomorrow at 9 a.m.” Siri understands that “there” means Muir Woods and schedules the hike without you having to repeat the location. You can also switch seamlessly between typing and speaking to Siri, which is perfect for situations where talking aloud isn’t convenient.

These improvements are crucial for making technology more accessible and user-friendly. By expanding its capabilities and making it more contextually aware, Apple Intelligence ensures that users can rely on Siri for a wider range of tasks. This can significantly enhance productivity and convenience, making it easier to manage daily activities and access information quickly. For anyone looking to streamline their workflow or simplify their interaction with technology, these enhancements make Siri an even more integral part of everyday life. Whether you’re coordinating a busy schedule, managing home automation, or simply trying to stay organized, the new capabilities make your digital assistant more indispensable than ever.

Conclusion

Integrating deeply with personal context, Apple Intelligence redefines how we interact with our devices. It understands your routines, preferences, and relationships, offering solutions that feel tailor-made for you. With most tasks processed on your device, it keeps your data secure while boosting productivity through advanced writing tools, priority notifications, and real-time transcription. Fun features like Image Playground and Genmoji bring a personal touch to visual communication, while enhanced photo and video search makes managing digital memories a breeze. Siri’s new abilities enable smooth, conversational interactions, making everyday tech use more intuitive and efficient, all while upholding strong privacy standards. I hope this helps explain what Apple Intelligence is, what its features are, and how you can benefit from them.

The post Apple Intelligence at WWDC 2024: The Future of Personalized and Secure AI first appeared on Yanko Design.